How Does NUMA Architecture Impact Performance on High Core Count Servers?

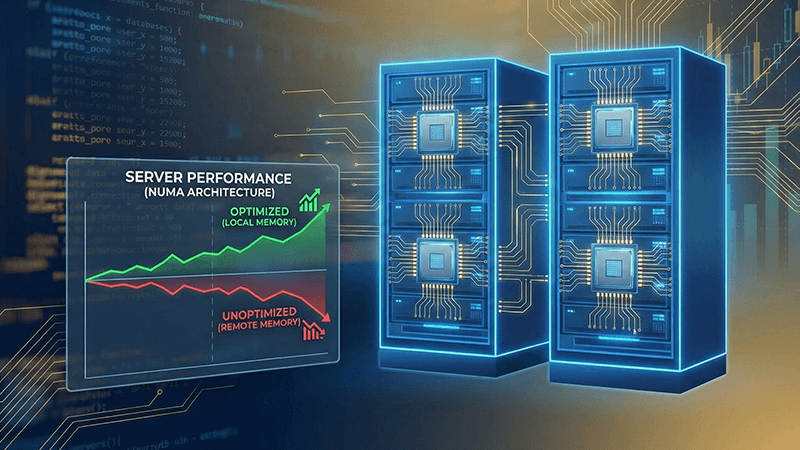

On modern servers with dozens or even hundreds of CPU cores, performance problems rarely announce themselves clearly. Average CPU utilization looks low, memory capacity is abundant, and network graphs appear healthy. Yet under real workloads, latency becomes unstable, throughput stops scaling linearly, and response times fluctuate as concurrency increases. These effects are not caused by a lack of hardware resources. They emerge from how NUMA server architecture governs memory access, CPU locality, and data movement on high core count servers.

NUMA architecture performance has become a decisive factor in how enterprise workloads behave in production, especially as server platforms continue to scale vertically.

NUMA Server Architecture in Real Terms

Uniform Memory Access (UMA) architectures failed to keep pace with modern CPU design. As processors gained more cores, shared memory buses became congested, cache coherency traffic exploded, and scalability stalled. Non-Uniform Memory Access (NUMA) was introduced to address this limitation.

In a NUMA server architecture, the system is divided into nodes. Each node consists of CPU cores and memory that are physically close to one another. Local memory access is fast and efficient. Memory attached to another node is still accessible, but at higher latency due to interconnect traversal.

This difference in access speed is the defining characteristic of NUMA and the foundation of its impact on server performance.
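To make the node layout concrete, here is a minimal sketch that lists which CPUs belong to which NUMA node on a Linux host. It assumes the kernel exposes the topology under `/sys/devices/system/node` with a `cpulist` file per node. The function names `parse_cpulist` and `read_numa_topology` are illustrative, not part of any library.

```python
from __future__ import annotations

from pathlib import Path


def parse_cpulist(text: str) -> list[int]:
    """Expand a kernel cpulist string such as '0-3,8-11' into CPU ids."""
    cpus: list[int] = []
    for part in text.strip().split(","):
        if not part:
            continue  # an empty list (e.g. a memory-only node) yields no CPUs
        if "-" in part:
            start, end = part.split("-")
            cpus.extend(range(int(start), int(end) + 1))
        else:
            cpus.append(int(part))
    return cpus


def read_numa_topology(root: str = "/sys/devices/system/node") -> dict[int, list[int]]:
    """Map each NUMA node id to its CPU ids; returns {} if the path is absent."""
    topology: dict[int, list[int]] = {}
    base = Path(root)
    if not base.is_dir():
        return topology
    for node_dir in base.glob("node[0-9]*"):
        cpulist = node_dir / "cpulist"
        if cpulist.is_file():
            node_id = int(node_dir.name[len("node"):])
            topology[node_id] = parse_cpulist(cpulist.read_text())
    return topology


if __name__ == "__main__":
    for node, cpus in sorted(read_numa_topology().items()):
        print(f"node {node}: {len(cpus)} CPUs -> {cpus}")
```

On a host without NUMA information in sysfs, the script prints nothing rather than failing.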

NUMA vs UMA Performance in Production Environments

NUMA vs UMA performance differences become meaningful only when systems scale. UMA provides uniform latency and simpler scheduling, but it cannot efficiently support high core counts. NUMA introduces complexity, but it enables multi-socket systems with far greater aggregate performance.

In real environments, NUMA delivers superior scalability, but only when software, operating systems, and workloads are aware of node boundaries. When they are not, remote memory access increases and performance becomes inconsistent, even though resources appear underutilized.

Why High Core Count Servers Amplify NUMA Effects

As core counts rise, memory behavior overtakes raw CPU speed as the primary performance limiter. Each additional core increases pressure on caches and memory controllers. NUMA nodes localize this pressure, but only when workloads are aligned with the architecture.

On high core count servers, misaligned NUMA usage typically results in:

- Increased remote memory access

- Higher cache coherency overhead

- Interconnect congestion

- Reduced effective memory bandwidth per core

- Latency jitter under load

This explains why adding cores does not always increase throughput and can sometimes reduce application performance.

Memory Locality and Latency Sensitivity

Local memory access delivers consistent, low latency. Remote memory access introduces variability that grows under contention. This difference is especially visible in latency-sensitive workloads such as transactional databases, APIs, and real-time services.
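A rough way to reason about the cost of remote access is a weighted average of local and remote latency. The sketch below is a simplified model that ignores contention and caching. The latency figures in the example are hypothetical placeholders, not measurements from any specific platform.

```python
def average_memory_latency_ns(local_ns: float, remote_ns: float,
                              remote_fraction: float) -> float:
    """Weighted average latency when a share of accesses goes to a remote node."""
    if not 0.0 <= remote_fraction <= 1.0:
        raise ValueError("remote_fraction must be between 0 and 1")
    return (1.0 - remote_fraction) * local_ns + remote_fraction * remote_ns


if __name__ == "__main__":
    # Hypothetical latencies chosen only for illustration.
    local_ns, remote_ns = 100.0, 200.0
    for fraction in (0.0, 0.25, 0.5):
        avg = average_memory_latency_ns(local_ns, remote_ns, fraction)
        print(f"{fraction:.0%} remote accesses -> {avg:.0f} ns average")
```

Even in this idealized model, a quarter of accesses going remote raises the average noticeably. Under real contention the tail latency is likely to degrade further than the average suggests.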

Operating systems attempt to mitigate this through automatic NUMA balancing, migrating memory pages closer to the threads that use them. However, this process is reactive. Under bursty or rapidly changing workloads, memory placement often lags behind execution, creating short but frequent latency spikes that are difficult to diagnose.
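One way to check whether placement is drifting is to read the kernel's per-node allocation counters. The sketch below assumes a Linux host that exposes `/sys/devices/system/node/nodeN/numastat` and the `/proc/sys/kernel/numa_balancing` setting. The helper names are illustrative. These counters track page allocations rather than individual memory accesses, so treat the ratio as a rough signal only.

```python
from __future__ import annotations

from pathlib import Path


def parse_numastat(text: str) -> dict[str, int]:
    """Parse 'name value' lines from a per-node numastat file."""
    stats: dict[str, int] = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) == 2:
            stats[fields[0]] = int(fields[1])
    return stats


def other_node_share(stats: dict[str, int]) -> float:
    """Share of this node's allocations made by tasks running on other nodes."""
    local = stats.get("local_node", 0)
    other = stats.get("other_node", 0)
    total = local + other
    return other / total if total else 0.0


def balancing_mode(path: str = "/proc/sys/kernel/numa_balancing") -> int | None:
    """Return the numa_balancing setting (0 means disabled), or None if absent."""
    p = Path(path)
    return int(p.read_text().strip()) if p.is_file() else None


if __name__ == "__main__":
    print("numa_balancing:", balancing_mode())
    for stat_file in sorted(Path("/sys/devices/system/node").glob("node[0-9]*/numastat")):
        stats = parse_numastat(stat_file.read_text())
        print(f"{stat_file.parent.name}: other_node share = {other_node_share(stats):.1%}")
```

Because the counters are cumulative since boot, comparing two samples taken a few minutes apart is usually more informative than a single reading.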

NUMA Impact on Virtualization Performance

Virtualization platforms abstract hardware complexity, but NUMA behavior still applies. Hypervisors such as Hyper-V, KVM, and VMware are NUMA-aware, yet default configurations prioritize flexibility over optimal locality.

When virtual machines span multiple NUMA nodes, vCPUs often access memory attached to a different node, increasing latency and reducing throughput. Over time, this leads to inconsistent performance even when overall CPU usage remains low.

Best-practice NUMA optimization in virtualized environments focuses on sizing and placement rather than raw density. Aligning vCPU counts and memory allocation with NUMA node boundaries often delivers larger performance gains than increasing hardware capacity.
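The sizing rule can be expressed as a quick check: a virtual machine fits inside one node only if both its vCPU count and its memory fit there. The sketch below is an illustrative calculation, not a hypervisor API. The host dimensions (32 cores and 256 GiB per node) are hypothetical.

```python
import math


def nodes_required(vcpus: int, memory_gib: float,
                   cores_per_node: int, memory_per_node_gib: float) -> int:
    """Minimum number of NUMA nodes a VM must span given its CPU and memory size."""
    if min(vcpus, memory_gib, cores_per_node, memory_per_node_gib) <= 0:
        raise ValueError("all sizes must be positive")
    by_cpu = math.ceil(vcpus / cores_per_node)
    by_memory = math.ceil(memory_gib / memory_per_node_gib)
    return max(by_cpu, by_memory)


if __name__ == "__main__":
    # Hypothetical host: each NUMA node has 32 cores and 256 GiB of memory.
    cores_per_node, memory_per_node_gib = 32, 256
    for vcpus, memory_gib in [(16, 128), (48, 128), (16, 300)]:
        span = nodes_required(vcpus, memory_gib, cores_per_node, memory_per_node_gib)
        verdict = "fits in one node" if span == 1 else f"spans {span} nodes"
        print(f"VM with {vcpus} vCPUs / {memory_gib} GiB: {verdict}")
```

Note that memory alone can force a VM across nodes even when its vCPU count is small, which is why both dimensions are checked.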

NUMA and Database Workloads at Scale

Databases are among the most NUMA-sensitive workloads in production. Enterprise platforms such as Microsoft SQL Server are explicitly designed to take advantage of NUMA architecture.

They attempt to allocate memory locally per node, bind worker threads to nearby cores, and reduce cross-node contention. When this alignment is preserved, concurrency improves and latency stabilizes. When it is broken, symptoms include inconsistent query performance, reduced throughput, and inefficient CPU utilization despite available headroom.

This is why NUMA impact on server performance is repeatedly emphasized in database vendor best practices and architectural guidance.

Containers, Microservices, and NUMA Reality

Containers simplify deployment but do not eliminate hardware constraints. Without CPU pinning or memory affinity, containerized workloads can migrate across NUMA nodes, increasing cache misses and remote memory access.

In high-throughput container environments, this behavior introduces jitter and noisy-neighbor effects that are often mistaken for application issues. NUMA-aware scheduling and resource isolation are increasingly used to maintain predictable performance on high core count servers.
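As an illustration of CPU pinning, the sketch below restricts the current process to the CPUs of a single NUMA node using `os.sched_setaffinity`, which is available in the Python standard library on Linux only. The helpers `node_cpus` and `pin_to_cpus` are illustrative names. This constrains CPU placement only. Explicit memory policy is not set here, although Linux typically allocates new pages on the node where the thread is running.

```python
from __future__ import annotations

import os
from pathlib import Path


def node_cpus(node: int, root: str = "/sys/devices/system/node") -> set[int]:
    """Return the CPU ids of one NUMA node, or an empty set if unavailable."""
    path = Path(root) / f"node{node}" / "cpulist"
    cpus: set[int] = set()
    if not path.is_file():
        return cpus
    for part in path.read_text().strip().split(","):
        if not part:
            continue
        low, _, high = part.partition("-")
        cpus.update(range(int(low), int(high or low) + 1))
    return cpus


def pin_to_cpus(cpus: set[int]) -> set[int]:
    """Restrict the calling process to the requested CPUs it is allowed to use."""
    allowed = os.sched_getaffinity(0) & set(cpus)
    if not allowed:
        raise ValueError("no requested CPU is available to this process")
    os.sched_setaffinity(0, allowed)
    return os.sched_getaffinity(0)


if __name__ == "__main__":
    target = node_cpus(0)  # node 0 is an arbitrary choice for the example
    if target:
        try:
            print("now pinned to:", sorted(pin_to_cpus(target)))
        except ValueError as exc:
            print("could not pin:", exc)
    else:
        print("no NUMA topology found in sysfs")
```

In container platforms the same effect is usually achieved through the orchestrator's CPU and topology settings rather than from inside the application, so this is best read as a demonstration of the underlying mechanism.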

PCIe, Storage, and Network Locality

NUMA affects more than memory. PCIe devices such as NVMe storage and network interface cards are physically attached to specific NUMA nodes. When I/O processing occurs on remote nodes, latency increases and interconnect bandwidth is consumed unnecessarily.

For I/O-intensive workloads such as web hosting platforms, media delivery, and financial systems, aligning storage and network processing with local NUMA nodes reduces jitter and improves response time consistency.
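To see which node a network card is attached to, Linux exposes a `numa_node` file under each PCI device in sysfs. The sketch below reads it for every interface in `/sys/class/net`. The helper names are illustrative. A value of -1 means the kernel has no node affinity recorded for the device, and virtual interfaces without a backing device are reported as `None`.

```python
from __future__ import annotations

from pathlib import Path


def read_numa_node(numa_node_file: Path) -> int | None:
    """Return the NUMA node from a sysfs numa_node file, or None if unknown."""
    if not numa_node_file.is_file():
        return None
    value = int(numa_node_file.read_text().strip())
    return value if value >= 0 else None  # the kernel reports -1 for "no affinity"


def nic_numa_nodes(root: str = "/sys/class/net") -> dict[str, int | None]:
    """Map each network interface name to the NUMA node of its PCI device."""
    base = Path(root)
    if not base.is_dir():
        return {}
    return {
        iface.name: read_numa_node(iface / "device" / "numa_node")
        for iface in sorted(base.iterdir())
        if iface.is_dir()
    }


if __name__ == "__main__":
    for name, node in nic_numa_nodes().items():
        label = f"node {node}" if node is not None else "no NUMA affinity reported"
        print(f"{name}: {label}")
```

The same `numa_node` file exists for other PCI devices, so a similar lookup under `/sys/bus/pci/devices` should work for NVMe controllers. The result can then guide which CPUs handle interrupts and worker threads for that device.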

CPU Architecture Choices and NUMA Design

Different CPU platforms expose NUMA topology differently. Some emphasize fewer, larger NUMA nodes with high memory bandwidth, while others favor extreme core density through multiple internal domains.

On high core count servers, memory channels per node are just as important as total core count. Without sufficient bandwidth, additional cores compete for the same resources, reducing effective performance.
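The bandwidth argument can be made concrete with simple arithmetic. The sketch below estimates theoretical peak bandwidth per node as transfer rate times 8 bytes per transfer (a 64-bit data path per channel) times the channel count, then divides by the number of cores. The configurations shown are hypothetical, and sustained bandwidth in practice is lower than this theoretical peak.

```python
def peak_bandwidth_gbs(transfer_rate_mts: float, channels: int,
                       bytes_per_transfer: int = 8) -> float:
    """Theoretical peak memory bandwidth in GB/s for one NUMA node."""
    return transfer_rate_mts * bytes_per_transfer * channels / 1000.0


def bandwidth_per_core_gbs(transfer_rate_mts: float, channels: int,
                           cores: int) -> float:
    """Peak bandwidth available per core if all cores stream memory at once."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    return peak_bandwidth_gbs(transfer_rate_mts, channels) / cores


if __name__ == "__main__":
    # Hypothetical nodes: 8 channels at 4800 MT/s, with 32 or 64 cores.
    for cores in (32, 64):
        total = peak_bandwidth_gbs(4800, 8)
        per_core = bandwidth_per_core_gbs(4800, 8, cores)
        print(f"{cores} cores: {total:.1f} GB/s total, {per_core:.1f} GB/s per core")
```

Doubling the core count on the same memory configuration halves the per-core share, which is the effect the paragraph above describes.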

This makes NUMA server architecture a core consideration during CPU selection, not something to address after deployment.

Operational Trade-Offs and Long-Term Strategy

NUMA-aware optimization improves performance, but it also increases operational complexity. Teams must balance:

- Maximum consolidation versus predictable latency

- Automated scheduling versus explicit affinity

- Vertical scaling versus horizontal expansion

Many modern infrastructures favor simpler NUMA layouts combined with horizontal scaling to reduce long-term tuning overhead and operational risk.

Dataplugs Dedicated Servers and NUMA-Aware Infrastructure

Dedicated servers provide the control required to optimize NUMA behavior effectively. Full access to CPU topology, memory configuration, BIOS options, and operating system tuning allows workloads to align with hardware architecture rather than fight against it.

Dataplugs dedicated servers are built on modern enterprise platforms designed to support high core count CPUs, strong memory bandwidth, and stable high-speed connectivity. This makes them well suited for NUMA-sensitive workloads such as virtualization, databases, and performance-critical applications where consistency and scalability matter.

With flexible configurations and full administrative control, Dataplugs enables teams to design NUMA-aware environments that prioritize real-world performance instead of relying on default assumptions.

When NUMA Becomes a Performance Advantage

NUMA architecture is not a limitation. It is the mechanism that allows modern servers to scale beyond the constraints of older designs. When understood and applied correctly, it delivers higher efficiency, better utilization of high core count servers, and more predictable behavior under sustained load.

When ignored, it becomes a hidden source of inefficiency that additional hardware cannot resolve.

Conclusion

NUMA architecture performance defines how modern high core count servers behave in real production environments. Memory locality, node boundaries, and workload placement matter more than raw specifications alone.

The true NUMA impact on server performance appears in latency consistency, scalability ceilings, and operational stability. Understanding NUMA vs UMA performance trade-offs and aligning software with hardware topology is essential for building infrastructure that scales reliably.

For teams planning or optimizing NUMA-sensitive workloads on dedicated infrastructure, having the right platform and control makes the difference. To explore dedicated server solutions designed for modern NUMA-aware deployments, connect with Dataplugs via live chat or email at sales@dataplugs.com.