PCIe Gen 4 vs Gen 5: What Is the Impact on NVMe and GPU Servers?

In modern server stacks, performance degradation rarely announces itself clearly. Throughput looks healthy, utilization appears balanced, yet response times stretch as concurrency increases. NVMe drives report high speeds, GPUs show available compute, and still workloads slow under pressure. This gap between expected and observed performance increasingly originates from how data flows inside the server.

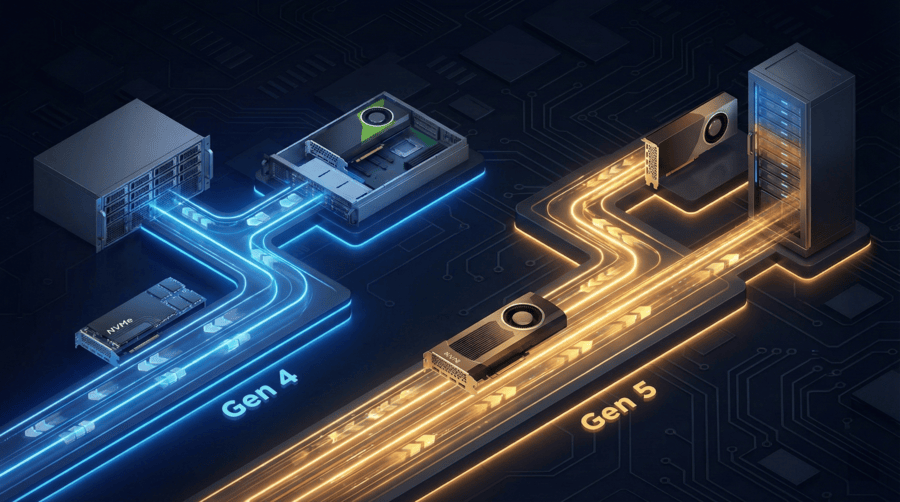

As storage bandwidth and accelerator density rise together, PCIe becomes the silent governor. The comparison between PCIe Gen 4 vs Gen 5 is therefore not about chasing newer standards, but about understanding how NVMe and GPU servers behave when real workloads collide on the same interconnect.

Why PCIe behavior defines modern server performance

PCIe is the internal transport layer of a server. Every NVMe I/O, every transfer between host memory and a GPU, and most accelerator traffic traverses PCIe lanes. Under light or isolated use, its limits remain hidden. Under sustained production load, PCIe design determines whether performance scales linearly or fragments.

PCIe Gen 4 doubled per-lane bandwidth over Gen 3, from 8 GT/s to 16 GT/s, and removed many historical bottlenecks. For years, this was sufficient. Today, servers often combine multiple NVMe drives, GPUs, and high-speed network interfaces. When these components operate simultaneously, contention emerges before individual devices reach saturation.

At that point, interconnect design matters more than raw component specifications.

NVMe performance comparison under sustained load

PCIe Gen 4 NVMe devices typically reach around 7 GB per second on x4 links, close to the link's theoretical ceiling of about 7.9 GB per second. For single-drive tasks, this is ample. Challenges arise when multiple NVMe drives serve databases, virtualization hosts, or AI pipelines concurrently while GPUs request data in parallel.

As queues deepen, latency becomes inconsistent. I/O bursts overlap with GPU memory transfers. Storage remains fast on average, but tail latency becomes unpredictable. For applications sensitive to response time consistency, this variability directly affects throughput and stability.
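The gap between "fast on average" and "unpredictable at the tail" is easier to see with numbers. The following is a minimal Python sketch, not a benchmarking tool: the latency samples are invented purely for illustration (they are not measurements from any drive or server), and `percentile` is a helper written for this example using the simple nearest-rank definition.

```python
import math

def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of values at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]

# Hypothetical I/O completion latencies in microseconds (illustrative only):
# 97 fast completions plus 3 slow ones that overlapped with other bus traffic.
latencies_us = [90] * 97 + [900, 1200, 1500]

mean_us = sum(latencies_us) / len(latencies_us)
p50_us = percentile(latencies_us, 50)
p99_us = percentile(latencies_us, 99)

print(f"mean={mean_us:.1f} us  p50={p50_us} us  p99={p99_us} us")
```

In this made-up sample the median stays at the fast value while the 99th percentile is more than ten times higher, which is the pattern the paragraph above describes. Against real systems, the same calculation would be applied to latencies reported by whatever I/O benchmarking or tracing tool is already in use.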

PCIe Gen 5 doubles per-lane bandwidth again, to 32 GT/s, which puts the theoretical ceiling of an x4 NVMe link near 15.8 GB per second. More importantly, a transfer of a given size occupies the link for roughly half as long. In mixed workloads, this translates into smoother behavior and faster recovery from bursts rather than simply higher benchmark numbers.
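The per-generation figures follow from simple arithmetic, sketched below in Python. The signalling rates (8, 16, and 32 GT/s) and the 128b/130b encoding are properties of PCIe Gen 3 through Gen 5; the function names and the 1 MiB payload are choices made for this example. The results are theoretical ceilings for one direction of a link. Packet headers and flow control reduce what a device actually achieves, which is why Gen 4 drives land near 7 GB per second rather than at the ceiling.

```python
# Raw signalling rate per lane in GT/s for each PCIe generation.
TRANSFER_RATE_GT_S = {3: 8.0, 4: 16.0, 5: 32.0}

# Gen 3 and later use 128b/130b encoding: 128 payload bits per 130 bits on the wire.
ENCODING_EFFICIENCY = 128 / 130

def link_bandwidth_gb_s(gen, lanes):
    """Theoretical one-direction link bandwidth in GB/s (decimal), before packet overhead."""
    return TRANSFER_RATE_GT_S[gen] * ENCODING_EFFICIENCY / 8 * lanes

def wire_time_us(payload_bytes, gen, lanes):
    """Ideal time in microseconds that a payload of this size occupies the link."""
    return payload_bytes / (link_bandwidth_gb_s(gen, lanes) * 1e9) * 1e6

for gen in (4, 5):
    print(f"Gen {gen} x4:  {link_bandwidth_gb_s(gen, 4):.2f} GB/s, "
          f"1 MiB occupies the link for {wire_time_us(1 << 20, gen, 4):.1f} us")
    print(f"Gen {gen} x16: {link_bandwidth_gb_s(gen, 16):.2f} GB/s")
```

The halved wire time matters for contention: a burst that clears the link sooner leaves more room for whatever other device is waiting behind it.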

GPU server bandwidth and interconnect pressure

In isolation, most GPUs do not saturate x16 PCIe links. This has led to the assumption that PCIe generation has limited impact on GPU performance. That assumption fails once GPUs exchange large datasets with CPUs or stream data directly from NVMe storage.

AI training, distributed inference, and multi-GPU servers place sustained pressure on GPU server bandwidth. PCIe Gen 5 shortens large transfers and increases headroom for concurrent GPU and storage activity, improving synchronization and utilization. The advantage appears most clearly when GPUs and NVMe operate together, not when tested independently.

Lane topology matters more than raw speed

PCIe generation alone does not guarantee performance. Lane topology determines whether GPUs and NVMe cooperate or compete.

Servers that connect GPUs directly to CPU lanes while routing NVMe through well-provisioned paths avoid hidden contention. Poorly designed systems can negate the benefit of newer PCIe generations by forcing high-bandwidth devices to share limited links, for example a single chipset uplink or an oversubscribed PCIe switch.

This is why well-architected PCIe Gen 4 servers can outperform poorly implemented Gen 5 systems in real workloads.
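A rough lane budget makes this kind of sharing visible before hardware is ordered. The sketch below is a simplified model, not a description of any specific platform: the 64-lane CPU, the x16 uplink, and the device list are all hypothetical, and `Device` and `lane_report` are names invented for this example. It counts lanes wired directly to the CPU and reports how heavily the devices behind a shared uplink oversubscribe it.

```python
from dataclasses import dataclass

@dataclass
class Device:
    name: str
    lanes: int           # link width the device uses
    direct_to_cpu: bool  # False means it sits behind a shared uplink (switch or chipset)

def lane_report(cpu_lanes, uplink_lanes, devices):
    """Summarise direct CPU lane use and oversubscription of one shared uplink."""
    direct = sum(d.lanes for d in devices if d.direct_to_cpu)
    shared = sum(d.lanes for d in devices if not d.direct_to_cpu)
    if shared and uplink_lanes <= 0:
        raise ValueError("devices behind an uplink require uplink_lanes > 0")
    used = direct + (uplink_lanes if shared else 0)
    return {
        "cpu_lanes_used": used,
        "cpu_lanes_free": cpu_lanes - used,
        # Ratio of downstream lanes to uplink lanes; above 1.0 means devices can contend.
        "uplink_oversubscription": shared / uplink_lanes if shared else 0.0,
    }

# Hypothetical layout: two GPUs and a NIC on CPU lanes, eight x4 NVMe drives behind an x16 uplink.
devices = [
    Device("gpu0", 16, True),
    Device("gpu1", 16, True),
    Device("nic0", 16, True),
] + [Device(f"nvme{i}", 4, False) for i in range(8)]

report = lane_report(cpu_lanes=64, uplink_lanes=16, devices=devices)
print(report)
```

In this made-up layout the eight drives expose 32 lanes behind a 16-lane uplink, an oversubscription of 2.0. Whether that matters depends on how many drives are busy at once; the model only shows where sharing exists, not how much traffic crosses it.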

Thermal and signal integrity challenges at higher PCIe speeds

As PCIe speeds increase, electrical margins shrink. PCIe Gen 5 introduces tighter signal integrity requirements, higher thermal sensitivity, and stricter board-level design constraints.

Without proper retimers, trace layouts, and airflow planning, links may train down to a lower speed or devices may throttle under sustained load. These behaviors rarely appear in short benchmarks but surface during continuous production use.

Enterprise platforms that validate PCIe behavior under load maintain stable throughput. Platforms built without this validation may technically support Gen 5 while failing to sustain it.
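On Linux, one way to spot a link running below its capability is to compare the negotiated and maximum link attributes that the kernel exposes in sysfs. The sketch below assumes a Linux host and reads the `current_link_speed`, `max_link_speed`, `current_link_width`, and `max_link_width` files under `/sys/bus/pci/devices`; the helper names are invented for this example. Two caveats apply: some devices deliberately lower their link speed when idle to save power, so the check is most meaningful under load, and the maximum reported is the device's own capability, so a Gen 5 device in a Gen 4 slot will also be flagged.

```python
import glob
import os
import re

def parse_gt_s(text):
    """Extract the numeric GT/s value from a sysfs string such as '16.0 GT/s PCIe'."""
    match = re.match(r"\s*([0-9]+(?:\.[0-9]+)?)\s*GT/s", text)
    return float(match.group(1)) if match else None

def read_attr(device_dir, name):
    """Return the stripped contents of a sysfs attribute, or None if it cannot be read."""
    try:
        with open(os.path.join(device_dir, name)) as f:
            return f.read().strip()
    except OSError:
        return None

def link_status(cur_speed, max_speed, cur_width, max_width):
    """Return 'ok', 'degraded', or 'unknown' from raw sysfs attribute strings."""
    speeds = (parse_gt_s(cur_speed or ""), parse_gt_s(max_speed or ""))
    try:
        widths = (int(cur_width), int(max_width))
    except (TypeError, ValueError):
        return "unknown"
    if None in speeds or 0 in widths:
        return "unknown"
    if speeds[0] < speeds[1] or widths[0] < widths[1]:
        return "degraded"
    return "ok"

def find_degraded_links(root="/sys/bus/pci/devices"):
    """List (address, current speed, max speed, current width, max width) for degraded links."""
    degraded = []
    for dev in sorted(glob.glob(os.path.join(root, "*"))):
        attrs = [read_attr(dev, n) for n in (
            "current_link_speed", "max_link_speed",
            "current_link_width", "max_link_width")]
        if link_status(*attrs) == "degraded":
            degraded.append((os.path.basename(dev), *attrs))
    return degraded

if __name__ == "__main__":
    for entry in find_degraded_links():
        print(entry)
```

Running this periodically during a sustained load test, rather than once after boot, is more likely to catch the intermittent downshifts described above.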

Workload patterns determine PCIe generation value

Not every workload benefits equally from PCIe Gen 5. Virtualization platforms, transactional databases, and many web workloads remain constrained by memory behavior, CPU scheduling, or application logic rather than interconnect bandwidth.

PCIe Gen 4 servers continue to perform reliably where workload patterns are predictable and concurrency grows gradually. PCIe Gen 5 becomes valuable where multiple high-throughput components interact continuously and where future scaling is expected.

Choosing between generations should follow workload interaction patterns, not marketing labels.

Future scaling and PCIe headroom

Infrastructure decisions usually have to hold for several years. NVMe drives continue to increase in throughput. GPUs continue to grow in memory bandwidth demand. Network interfaces advance toward higher speeds.

PCIe Gen 5 provides headroom that protects against future contention. This headroom does not always deliver immediate gains, but it preserves performance consistency as components evolve within the same platform lifecycle.

For environments planning multi-year growth, interconnect capacity becomes a form of risk management.

How Dataplugs approaches PCIe architecture

Dataplugs treats PCIe as a structural foundation rather than a specification line item. Dedicated servers are designed with clear separation between GPU paths, NVMe storage, and auxiliary devices to prevent contention before it appears.

Platform selection prioritizes validated chipsets, stable lane routing, and cooling behavior under sustained load. This ensures that advertised PCIe capabilities remain available during real operation, not just during brief tests.

Dataplugs dedicated server unique advantage

Dataplugs dedicated servers are built around workload-driven I/O balance. GPU servers are provisioned with direct CPU lane access where accelerators demand consistent bandwidth. NVMe storage is deployed in layouts that preserve latency stability during burst traffic and concurrent access.

Rather than optimizing for short-lived benchmarks, Dataplugs focuses on long-term operational stability. PCIe Gen 4 servers are offered where they deliver predictable performance and cost efficiency. PCIe Gen 5 servers are deployed where higher NVMe concurrency, GPU density, and future scaling justify the additional interconnect capacity.

This approach allows customers to deploy dedicated servers that behave consistently under production load, whether running databases, virtualization platforms, AI workloads, or storage-intensive applications. As requirements grow, the PCIe architecture scales without forcing disruptive redesigns.

Choosing the right PCIe path forward

The decision between PCIe Gen 4 vs Gen 5 should be guided by how NVMe performance and GPU bandwidth intersect under real workloads. Peak specifications matter less than sustained behavior.

In modern data centers, interconnect design increasingly determines whether systems scale smoothly or degrade under pressure. Dataplugs supports this reality with dedicated servers engineered for balanced PCIe architecture, stable NVMe performance, and reliable GPU throughput over time.

For expert guidance on selecting a dedicated server aligned with your workload and growth strategy, the Dataplugs team is available via live chat or email at sales@dataplugs.com.