Best Programming Languages for Web Crawling in 2026

Choosing the right programming language for web crawling in 2026 shapes how effectively you can gather, analyze, and act on web data. Modern web crawler development goes far beyond simple HTTP requests and HTML parsing. Today’s web crawlers must handle dynamic JavaScript content, avoid detection and blocking, scale to thousands of concurrent requests, and integrate with cloud-based storage and analytics. Understanding each language’s capabilities and ecosystem is essential for building resilient, scalable crawlers that hold up under real-world conditions.

Understanding the Foundations: What Is Web Crawling?

Web crawling is the automated process of systematically traversing websites, discovering URLs, and capturing web content for further analysis or indexing. A web crawler typically starts with a set of seed URLs, fetches those pages, extracts their links, adds newly discovered URLs to a queue (often called the crawl frontier), and repeats until it has covered the desired scope of the web. This process underpins everything from search engine indexing and price monitoring to compliance audits and competitive intelligence.
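The seed, fetch, extract, and enqueue loop can be sketched in Python using only the standard library. This is a minimal illustration under stated assumptions, not a production crawler: `fetch` is a hypothetical stand-in for your HTTP client (it returns HTML, or `None` on failure), the crawl is limited to the seed URLs' hosts, and the small in-memory `SITE` dictionary replaces real network access.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable, Dict, Iterable, List, Optional
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def extract_links(page_url: str, html: str) -> List[str]:
    parser = LinkExtractor()
    parser.feed(html)
    # Resolve relative links against the page URL and strip #fragments.
    return [urldefrag(urljoin(page_url, href))[0] for href in parser.links]


def crawl(
    seeds: Iterable[str],
    fetch: Callable[[str], Optional[str]],
    max_pages: int = 100,
) -> Dict[str, str]:
    """Breadth-first crawl that stays on the seed URLs' hosts."""
    seeds = list(seeds)
    allowed_hosts = {urlparse(url).netloc for url in seeds}
    frontier = deque(seeds)  # URLs waiting to be fetched (the crawl frontier)
    seen = set(seeds)        # URLs already queued, so none is fetched twice
    pages: Dict[str, str] = {}
    while frontier and len(pages) < max_pages:
        url = frontier.popleft()
        html = fetch(url)
        if html is None:     # fetch failed or the response was not HTML
            continue
        pages[url] = html
        for link in extract_links(url, html):
            if urlparse(link).netloc in allowed_hosts and link not in seen:
                seen.add(link)
                frontier.append(link)
    return pages


# Hypothetical three-page site standing in for real HTTP responses.
SITE = {
    "https://example.com/": '<a href="/a">A</a> <a href="https://other.org/">ext</a>',
    "https://example.com/a": '<a href="/b#top">B</a> <a href="/">home</a>',
    "https://example.com/b": "<p>no links here</p>",
}
pages = crawl(["https://example.com/"], SITE.get)
print(sorted(pages))  # ['https://example.com/', 'https://example.com/a', 'https://example.com/b']
```

A `deque` gives breadth-first order; replacing it with a priority queue would let a crawler visit the most important pages first.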

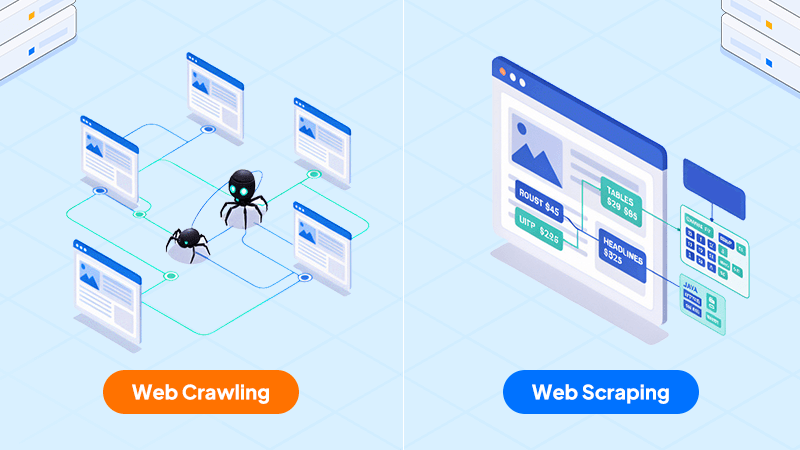

Web Crawling vs Web Scraping: Technical and Functional Differences

It’s important to distinguish web crawling from web scraping. Web crawling focuses on the automated discovery and collection of web pages and the links between them. Web scraping, by contrast, extracts specific data points, such as prices, headlines, or product specifications, from the retrieved content. Many production data pipelines combine both: crawlers discover and download content at scale, and scrapers parse and structure the data for downstream processing.
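To make the split concrete, here is a minimal scraping step in Python that runs on a page a crawler has already downloaded. It assumes, purely for illustration, that the page marks its fields with hypothetical `name` and `price` CSS classes and uses flat markup; real sites vary, and production scrapers usually rely on XPath or CSS selector libraries rather than a hand-written parser.

```python
from html.parser import HTMLParser
from typing import Dict, Optional, Set


class FieldScraper(HTMLParser):
    """Captures the text of elements whose class attribute names a wanted field.

    Assumes flat markup: each field's text sits directly inside the tagged element.
    """

    def __init__(self, fields: Set[str]) -> None:
        super().__init__()
        self.fields = fields
        self.current: Optional[str] = None
        self.data: Dict[str, str] = {}

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        for name in classes:
            if name in self.fields:
                self.current = name
                break

    def handle_endtag(self, tag):
        self.current = None

    def handle_data(self, data):
        text = data.strip()
        if self.current and text:
            self.data[self.current] = self.data.get(self.current, "") + text


# A product page the crawler has already downloaded (hypothetical markup).
html = (
    '<div class="product"><h2 class="name">Desk Lamp</h2>'
    '<span class="price">$24.99</span></div>'
)
scraper = FieldScraper({"name", "price"})
scraper.feed(html)
# Structure the raw strings for downstream processing.
record = {"name": scraper.data["name"], "price": float(scraper.data["price"].lstrip("$"))}
print(record)  # {'name': 'Desk Lamp', 'price': 24.99}
```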

In-Depth Comparison: Top Programming Languages for Web Crawler Development

Python:

Python is widely regarded for its balance of simplicity and power in web crawler development. Frameworks like Scrapy provide asynchronous, event-driven crawling out of the box. Scrapy’s selectors (XPath and CSS) allow precise extraction of links and data, and its built-in scheduler manages the request queue and filters duplicate requests within a single crawler process; distributing that queue across multiple processes or servers requires an external backend. For JavaScript-heavy sites, Python integrates with Selenium or Playwright to control headless browsers. In practice, production deployments often combine Scrapy with Redis or RabbitMQ for distributed URL management, and use item pipelines to store data in PostgreSQL or MongoDB. Retries and throttling are available as built-in components (RetryMiddleware and the AutoThrottle extension), which makes Python a top choice for both rapid prototyping and large-scale deployments.
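Scrapy ships retries and throttling as built-in components (RetryMiddleware and the AutoThrottle extension), so you rarely write them yourself. To show what these behaviors do, here is a framework-free Python sketch of retries with exponential backoff plus a fixed-interval throttle (simpler than AutoThrottle, which adapts its delay to server latency). It is not Scrapy's implementation; `TransientError` and the injectable `sleep` and `clock` parameters are illustrative assumptions that make the logic testable without a network.

```python
import time
from typing import Callable, Optional


class TransientError(Exception):
    """Hypothetical error a fetch function raises for retryable failures,
    such as timeouts or HTTP 429/503 responses."""


def fetch_with_retries(
    url: str,
    fetch: Callable[[str], str],
    max_retries: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[str]:
    """Call fetch(url), retrying transient failures with exponential backoff."""
    for attempt in range(max_retries + 1):
        try:
            return fetch(url)
        except TransientError:
            if attempt == max_retries:
                return None  # give up; the caller can log or re-queue the URL
            sleep(base_delay * 2 ** attempt)  # 0.5 s, 1 s, 2 s, ...
    return None


class Throttle:
    """Enforces a minimum interval between consecutive requests to one site."""

    def __init__(self, min_interval: float,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.min_interval = min_interval
        self.clock = clock
        self.sleep = sleep
        self.last: Optional[float] = None

    def wait(self) -> None:
        now = self.clock()
        if self.last is not None:
            remaining = self.min_interval - (now - self.last)
            if remaining > 0:
                self.sleep(remaining)
                now = self.clock()
        self.last = now


# Usage with a fake fetch that fails twice, then succeeds.
attempts = []


def flaky_fetch(url: str) -> str:
    attempts.append(url)
    if len(attempts) < 3:
        raise TransientError("HTTP 503")
    return "<html>ok</html>"


delays = []
throttle = Throttle(min_interval=1.0)
throttle.wait()  # call before each request to the same site
body = fetch_with_retries("https://example.com/", flaky_fetch, sleep=delays.append)
print(body, delays)  # <html>ok</html> [0.5, 1.0]
```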

Java:

Java’s strengths in crawler development lie in its robustness, true multithreading, and scalability in enterprise environments. Apache Nutch enables distributed crawling on Hadoop, with plugins for custom URL filtering and link analysis, plus deduplication of crawled documents. The Jsoup library is a popular choice for parsing and cleaning HTML, and supports CSS selectors for fast DOM traversal. More complex implementations often use thread pools to process URL queues concurrently, and integrate with enterprise authentication systems to crawl protected resources. For long-running crawlers, garbage collection removes the need for manual memory management (although retained references, such as an ever-growing set of seen URLs, can still leak memory), and JVM monitoring tools such as JMX and JDK Flight Recorder provide real-time insight into performance bottlenecks. Java is often the language of choice when uptime, throughput, and integration with legacy systems are critical.
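Python's `concurrent.futures.ThreadPoolExecutor` plays much the same role as a Java `ExecutorService`, so the thread-pool-plus-deduplication pattern can be sketched compactly with it. The `canonicalize` rules (lowercase the host, drop default ports and fragments, default an empty path to `/`) are one reasonable choice among many, and `fetch_links` is a hypothetical function that downloads a page and returns its absolute links.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List
from urllib.parse import urlsplit, urlunsplit

DEFAULT_PORTS = {("http", "80"), ("https", "443")}


def canonicalize(url: str) -> str:
    """Normalize a URL so trivially different spellings share one dedup key."""
    parts = urlsplit(url)  # urlsplit already lowercases the scheme
    netloc = parts.netloc.lower()
    host, _, port = netloc.rpartition(":")
    if host and (parts.scheme, port) in DEFAULT_PORTS:
        netloc = host  # drop :80 on http and :443 on https
    path = parts.path or "/"
    return urlunsplit((parts.scheme, netloc, path, parts.query, ""))  # no fragment


def crawl_concurrently(
    seeds: Iterable[str],
    fetch_links: Callable[[str], List[str]],
    max_workers: int = 8,
    max_pages: int = 1000,
) -> List[str]:
    """Level-by-level crawl; pool threads fetch, the calling thread deduplicates."""
    seen = {canonicalize(url) for url in seeds}
    level = sorted(seen)
    visited: List[str] = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while level and len(visited) < max_pages:
            level = level[: max_pages - len(visited)]
            visited.extend(level)
            next_level: List[str] = []
            # pool.map runs fetch_links in worker threads; only this thread
            # touches `seen`, so the set needs no lock. fetch_links should
            # catch its own errors, since map re-raises them here.
            for links in pool.map(fetch_links, level):
                for link in links:
                    key = canonicalize(link)
                    if key not in seen:
                        seen.add(key)
                        next_level.append(key)
            level = next_level
    return visited


# Hypothetical link graph standing in for fetched pages.
GRAPH = {
    "https://example.com/": ["https://EXAMPLE.com:443/a", "https://example.com/b#reviews"],
    "https://example.com/a": ["https://example.com/b", "https://example.com"],
    "https://example.com/b": [],
}
visited = crawl_concurrently(["https://example.com/"], lambda url: GRAPH.get(url, []))
print(visited)  # ['https://example.com/', 'https://example.com/a', 'https://example.com/b']
```

Keeping the `seen` set on one thread avoids locking; the trade-off is that each level waits for its slowest page before the next level starts.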

JavaScript (Node.js):

Node.js is a natural fit for crawling sites built with modern JavaScript frameworks like React or Angular. Puppeteer and Playwright provide browser automation, enabling crawlers to wait for AJAX requests, interact with page elements, and extract DOM content after rendering. Real-world implementations use Node’s async/await syntax to manage thousands of concurrent connections efficiently on a single event loop. Queue management is commonly handled by libraries like BullMQ (the successor to Bull; the older Kue library is no longer maintained), while Cheerio provides fast HTML parsing for static pages. Developers often deploy Node-based crawlers in Docker containers for easy scaling, and use logging and metrics platforms to monitor crawler health and response times. For rapid adaptation to changing site structures and dynamic content, Node.js stands out.
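Managing thousands of connections with async/await usually comes down to capping how many requests are in flight at once. Here is that bounded-concurrency pattern sketched with Python's asyncio, which follows the same event-loop model as Node. It is a sketch under assumptions: `fetch` is any hypothetical coroutine that returns a page body, and the default limit of 100 is arbitrary and should be tuned per target site.

```python
import asyncio
from typing import Awaitable, Callable, Dict, Iterable


async def fetch_all(
    urls: Iterable[str],
    fetch: Callable[[str], Awaitable[str]],
    limit: int = 100,
) -> Dict[str, str]:
    """Fetch many URLs concurrently with at most `limit` requests in flight."""
    semaphore = asyncio.Semaphore(limit)

    async def bounded_fetch(url: str):
        async with semaphore:  # waits here while `limit` fetches are running
            return url, await fetch(url)

    results = await asyncio.gather(*(bounded_fetch(url) for url in urls))
    return dict(results)
```

A caller would run it as `asyncio.run(fetch_all(urls, my_fetch, limit=200))`, where `my_fetch` is a hypothetical coroutine wrapping an async HTTP client.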

Go (Golang):

Go is increasingly selected for large-scale, high-concurrency crawling projects. Goroutines are lightweight, runtime-scheduled tasks, so a crawler can run tens of thousands of them to process URLs simultaneously, and channels simplify communication between the crawling, parsing, and storage components. The Colly framework offers callbacks and extensions for rotating proxies, managing cookies, and retrying failed requests. In distributed settings, Go crawlers are often paired with message queues (such as NATS or Kafka) to manage task distribution and backpressure. Compiled, self-contained binaries make deployment fast and resource-efficient, and Go’s static typing catches many bugs at compile time. For operations that need predictable latency and high throughput, Go’s performance is a significant advantage.
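Go pipelines usually connect the crawling, parsing, and storage stages with buffered channels. The sketch below shows the same structure with Python's `asyncio.Queue`, where a bounded `maxsize` provides the backpressure a full channel provides in Go, and a sentinel object stands in for closing a channel. The stage functions `fetch`, `parse`, and `store` are hypothetical placeholders.

```python
import asyncio
from typing import Any, Awaitable, Callable, Iterable

_DONE = object()  # sentinel telling the next stage that no more items will arrive


async def run_pipeline(
    urls: Iterable[str],
    fetch: Callable[[str], Awaitable[str]],
    parse: Callable[[str, str], Any],
    store: Callable[[Any], None],
    workers: int = 4,
    buffer: int = 10,
) -> None:
    """fetch -> parse -> store, with stages linked by bounded queues."""
    todo: asyncio.Queue = asyncio.Queue()
    for url in urls:
        todo.put_nowait(url)
    fetched: asyncio.Queue = asyncio.Queue(maxsize=buffer)
    parsed: asyncio.Queue = asyncio.Queue(maxsize=buffer)

    async def fetcher() -> None:
        while True:
            try:
                url = todo.get_nowait()
            except asyncio.QueueEmpty:
                return
            # put() waits while `fetched` is full: backpressure from slower stages.
            await fetched.put((url, await fetch(url)))

    async def fetch_stage() -> None:
        await asyncio.gather(*(fetcher() for _ in range(workers)))
        await fetched.put(_DONE)  # every fetcher has finished

    async def parse_stage() -> None:
        while (item := await fetched.get()) is not _DONE:
            url, body = item
            await parsed.put(parse(url, body))
        await parsed.put(_DONE)

    async def store_stage() -> None:
        while (record := await parsed.get()) is not _DONE:
            store(record)

    await asyncio.gather(fetch_stage(), parse_stage(), store_stage())
```

Several fetchers share one work queue while parsing and storage each run as a single consumer; a slow storage backend fills `parsed`, which in turn pauses parsing and then fetching.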

Ruby:

Ruby, with gems like Nokogiri for XML/HTML parsing and Mechanize for session management, excels where code readability and quick iteration are priorities. A typical implementation uses Mechanize to manage cookies, headers, and navigation, while Nokogiri parses the content for links and data. Ruby’s expressive syntax, along with the convention-over-configuration style many Ruby developers know from Rails, allows for concise and easily maintainable crawler scripts, especially for smaller or specialized data collection tasks. While Ruby is less common for massive, distributed crawling, it shines for rapid development of tools and scripts where developer productivity is paramount.
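For comparison, Python's standard library can approximate the cookie and header handling that Mechanize provides. This is not Mechanize's API, only an analogous sketch: `make_session` is a hypothetical helper, and the user-agent string is a placeholder you would replace with one that identifies your crawler.

```python
import http.cookiejar
import urllib.request
from typing import Tuple


def make_session(user_agent: str) -> Tuple[urllib.request.OpenerDirector, http.cookiejar.CookieJar]:
    """Build an opener that keeps cookies across requests and sends default headers."""
    jar = http.cookiejar.CookieJar()
    # HTTPCookieProcessor stores Set-Cookie values in `jar` and sends them back
    # on later requests through the same opener, much like a browser session.
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    # Replaces urllib's default headers for every request made with this opener.
    opener.addheaders = [("User-Agent", user_agent), ("Accept-Language", "en")]
    return opener, jar


opener, jar = make_session("example-crawler/1.0")
# With network access, reuse the same opener for each step of a session:
# opener.open("https://example.com/login").read()
```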

PHP and C++:

PHP is sometimes used for embedding crawlers within web applications or for server-side data integration. Libraries like Guzzle (for HTTP) and Symfony DomCrawler make it straightforward to build simple crawlers, and Guzzle can send requests concurrently through its promise-based pool. Still, PHP’s lack of built-in multithreading and its request-oriented execution model make it less suitable for long-running, high-scale operations. C++ is reserved for use cases that demand absolute control over memory and performance, such as custom crawling engines for specialized hardware or environments with strict latency requirements. Implementations in C++ often use libcurl for network requests and Gumbo or libxml2 for parsing, at the cost of significantly greater development and maintenance effort.

Optimizing Web Crawling with Dedicated Infrastructure

Regardless of the language, the underlying infrastructure is instrumental in determining crawler performance and reliability. Dedicated servers, such as those offered by Dataplugs, provide the bandwidth, CPU, and memory isolation needed for sustained, high-throughput operations. For teams deploying distributed or resource-intensive crawlers, dedicated environments enable fine-tuned control over firewall rules, DDoS mitigation, and storage options—crucial for compliance and operational continuity.

Deploying on dedicated hardware also simplifies the implementation of security measures, such as restricting outbound connections and monitoring traffic patterns. Monitoring outbound request rates also helps keep a crawler within polite limits, which reduces the risk of being detected or blocked.

Conclusion

Selecting the best programming language for web crawling in 2026 is about aligning technical strengths with project requirements, whether that means Python’s rapid development, Java’s scalability, Node.js’s handling of JavaScript-rendered content, or Go’s concurrency. Real-world crawler development calls for more than choosing a language: it requires thoughtful architecture, robust error handling, and reliable infrastructure. To support your web crawling projects with enterprise-grade performance and security, consider deploying on dedicated servers. For personalized advice or to discuss infrastructure tailored for your next crawler, contact Dataplugs via live chat or email sales@dataplugs.com. Our team is ready to help your data initiatives reach their full potential.