Deploying GPU Passthrough in Virtualized Environments for AI

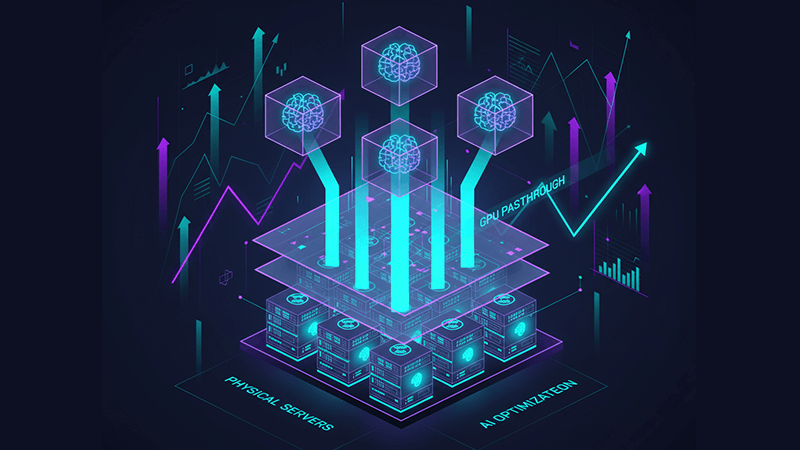

AI platforms struggle when GPU capacity exists but cannot be used where and when it is needed. One virtual machine monopolizes an accelerator while another stalls, training jobs fluctuate in runtime due to shared scheduling, and virtualization layers quietly erode the performance expected from high-cost GPUs. In these scenarios, infrastructure does not fail outright. It underdelivers. GPU passthrough virtualization addresses this by realigning virtualized environments with the real execution characteristics of AI workloads.

This article examines how GPU passthrough for AI workloads works in practice, how it compares with shared GPU models, and how infrastructure choices shape long-term scalability and performance.

Why AI Workloads Expose the Limits of Traditional GPU Virtualization

AI training and advanced inference behave very differently from conventional enterprise applications. Large language models, recommender systems, and computer vision pipelines depend on uninterrupted access to GPU memory, predictable PCIe bandwidth, and stable latency across long execution windows. Even minor contention at the GPU scheduler or memory layer can substantially lengthen training runs or introduce jitter into real-time inference.

Virtualized GPU deployments for AI training often start with shared approaches such as vGPU or time-slicing. These models work well for development environments, testing, and moderate inference workloads, where utilization efficiency matters more than absolute performance. However, as models grow in parameter count and memory footprint, the abstraction layer, rather than the GPU silicon itself, becomes the constraint.

GPU passthrough virtualization removes this constraint by mapping a physical GPU directly into a virtual machine. The hypervisor no longer mediates GPU execution, and the IOMMU translates the device's memory accesses in hardware. The guest operating system drives the GPU with its native driver, as if it were running on bare metal. This restores deterministic behavior and near-native performance.

GPU Passthrough Virtualization vs Virtual GPU Models

The decision between GPU passthrough and shared GPU virtualization is architectural, not philosophical. Each serves a different purpose.

Virtual GPU models, including NVIDIA vGPU and software-based time-slicing, focus on flexibility and density. Multiple virtual machines share a single GPU, making these models suitable for:

- Virtual desktop infrastructure and remote visualization

- Development and CI pipelines

- Lightweight or bursty inference workloads

- Multi-user environments where fairness matters more than throughput

GPU passthrough virtualization focuses on isolation and performance. A single virtual machine owns the entire GPU, eliminating memory overcommit, scheduling contention, and noisy neighbor effects. This makes passthrough suitable for:

- AI model training and fine-tuning

- High-performance computing and simulation

- Memory-intensive workloads that exceed vGPU profiles

- Latency-sensitive inference pipelines

In real-world AI platforms, both approaches often coexist. Passthrough GPUs handle the most demanding workloads, while shared GPUs serve experimentation and scale-out inference.

PCIe GPU Passthrough and Hardware Architecture

PCIe GPU passthrough relies on hardware-assisted I/O virtualization technologies such as Intel VT-d or AMD-Vi, each of which implements an IOMMU (input/output memory management unit). These features allow PCIe devices to be isolated and mapped directly into a virtual machine’s address space, with the IOMMU translating the device's DMA addresses.
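On a Linux/KVM host, you can check whether the IOMMU is active, and see how devices have been grouped, by walking the kernel's `/sys/kernel/iommu_groups` tree. The Python sketch below assumes that standard sysfs layout. The function name `iommu_groups` and the `sysfs_root` parameter are illustrative and not part of any library; `sysfs_root` exists so the logic can be exercised against a fake tree.

```python
import os
from pathlib import Path


def iommu_groups(sysfs_root: str = "/sys") -> dict[int, list[str]]:
    """Map each IOMMU group number to the PCI addresses it contains.

    On Linux, /sys/kernel/iommu_groups/<N>/devices/ holds one entry per
    device in group N, named by its PCI address (e.g. 0000:3b:00.0).
    """
    base = Path(sysfs_root) / "kernel" / "iommu_groups"
    groups: dict[int, list[str]] = {}
    if not base.is_dir():
        # No groups directory: the IOMMU is most likely not enabled.
        return groups
    for entry in base.iterdir():
        devices_dir = entry / "devices"
        if entry.name.isdigit() and devices_dir.is_dir():
            groups[int(entry.name)] = sorted(os.listdir(devices_dir))
    return groups
```

An empty result usually means the IOMMU is disabled in firmware or not enabled in the kernel. On Intel platforms, `intel_iommu=on` is a common kernel parameter for enabling it.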

For stable and high performance passthrough, several hardware factors matter:

- CPU and chipset must support IOMMU

- Firmware (BIOS or UEFI) must enable the IOMMU, and the platform must produce clean IOMMU groups, ideally with each GPU in its own group

- Sufficient PCIe lanes must be available to avoid bandwidth contention

- NUMA topology must align CPUs, memory, and GPUs correctly
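Two of these checks lend themselves to a quick script: whether the GPU's IOMMU group is clean, and whether its PCIe link trained at full width. The sketch below assumes a Linux host and the standard sysfs PCI attributes (`current_link_width`, `max_link_width`, and the related speed files). The helper names are illustrative.

The grouping test is deliberately conservative. It counts a group as clean only when every member is a function of the GPU itself, such as its audio controller. Some platforms also place PCIe bridges in the group, which VFIO generally tolerates.

The link test compares only width. Many GPUs lower their link speed when idle to save power, so a reduced `current_link_speed` on an idle card is not necessarily a fault.

```python
from pathlib import Path


def same_physical_device(addr_a: str, addr_b: str) -> bool:
    """True if two PCI addresses (domain:bus:slot.function) differ only in function."""
    return addr_a.rsplit(".", 1)[0] == addr_b.rsplit(".", 1)[0]


def group_is_clean(gpu_addr: str, group_members: list[str]) -> bool:
    """Conservative heuristic: every member of the GPU's IOMMU group is a
    function of the GPU itself (e.g. 0000:3b:00.0 plus audio at 0000:3b:00.1)."""
    return all(same_physical_device(gpu_addr, member) for member in group_members)


def pcie_link_status(pci_addr: str, sysfs_root: str = "/sys") -> dict[str, str]:
    """Read the negotiated and maximum PCIe link speed and width for one device."""
    dev = Path(sysfs_root) / "bus" / "pci" / "devices" / pci_addr
    keys = ("current_link_speed", "max_link_speed",
            "current_link_width", "max_link_width")
    return {key: (dev / key).read_text().strip() for key in keys}


def link_is_degraded(status: dict[str, str]) -> bool:
    """True if the link trained with fewer lanes than the device supports,
    e.g. an x16 GPU running at x8 because the slot or riser lacks lanes."""
    return int(status["current_link_width"]) < int(status["max_link_width"])
```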

NUMA alignment is often underestimated. If a GPU is attached to a different CPU socket than the memory and cores driving the workload, cross-socket traffic introduces latency that reduces effective throughput. In AI training, this can offset much of the benefit of passthrough.
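On Linux, sysfs exposes both the NUMA node a GPU is attached to and the CPUs local to that node. The following sketch assumes the standard `numa_node` and `node<N>/cpulist` files, and its helper names are illustrative. It returns the CPU ids that a passthrough VM's vCPUs could be pinned to.

```python
from pathlib import Path


def parse_cpulist(text: str) -> list[int]:
    """Expand a Linux cpulist string such as '0-3,8,10-11' into CPU ids."""
    cpus: list[int] = []
    for part in text.strip().split(","):
        if not part:
            continue  # memory-only nodes report an empty cpulist
        if "-" in part:
            start, end = part.split("-")
            cpus.extend(range(int(start), int(end) + 1))
        else:
            cpus.append(int(part))
    return cpus


def gpu_numa_node(pci_addr: str, sysfs_root: str = "/sys") -> int:
    """NUMA node of the GPU's PCIe attachment point (-1 if not reported)."""
    path = Path(sysfs_root) / "bus" / "pci" / "devices" / pci_addr / "numa_node"
    return int(path.read_text().strip())


def cpus_near_gpu(pci_addr: str, sysfs_root: str = "/sys") -> list[int]:
    """CPU ids on the same NUMA node as the GPU, or [] if locality is unknown."""
    node = gpu_numa_node(pci_addr, sysfs_root)
    if node < 0:
        return []
    cpulist = Path(sysfs_root) / "devices" / "system" / "node" / f"node{node}" / "cpulist"
    return parse_cpulist(cpulist.read_text())
```

In a libvirt-managed VM, these ids would typically feed `<vcpupin>` entries under `<cputune>`. A `<numatune>` memory setting would keep guest memory on the same node.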

Power delivery and cooling are equally critical. GPUs assigned via passthrough often operate at sustained high utilization. Server-grade platforms are designed for this thermal and electrical profile, while consumer systems may throttle or become unstable under continuous load.

Hypervisor Support: VMware, KVM, and OpenStack

GPU passthrough is supported across major hypervisors, but each implementation has practical differences.

VMware deployments typically implement GPU passthrough with DirectPath I/O. ESXi provides a mature ecosystem and predictable behavior, but passthrough configurations are largely static. Once a GPU is attached, live migration, snapshots, and some high-availability features are limited. This model fits fixed-capacity AI training nodes rather than highly dynamic environments.

KVM environments implement GPU passthrough with the Linux kernel's VFIO framework, which builds on the IOMMU. KVM offers deep control over device binding, kernel parameters, and NUMA placement. This makes it popular for research clusters, private AI clouds, and environments where customization matters.
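The sysfs `driver_override` interface is one way to hand a GPU to VFIO on a KVM host. The sketch below assumes a Linux host with the `vfio-pci` module loaded and root privileges. `bind_to_vfio` is a hypothetical helper, and `sysfs_root` exists only so the logic can be tested against a fake directory tree. Every function in the GPU's IOMMU group, such as its audio device, needs the same treatment.

```python
from pathlib import Path


def bind_to_vfio(pci_addr: str, sysfs_root: str = "/sys") -> None:
    """Rebind one PCI function to vfio-pci via the driver_override interface.

    Hypothetical helper: on a real host this needs root and a loaded
    vfio-pci module, and should run while the GPU is idle.
    """
    pci = Path(sysfs_root) / "bus" / "pci"
    dev = pci / "devices" / pci_addr
    # 1. Restrict which driver may claim this device on the next probe.
    (dev / "driver_override").write_text("vfio-pci")
    # 2. Detach the currently bound driver (e.g. nouveau or nvidia), if any.
    unbind = dev / "driver" / "unbind"
    if unbind.exists():
        unbind.write_text(pci_addr)
    # 3. Ask the PCI core to re-probe the device; vfio-pci now claims it.
    (pci / "drivers_probe").write_text(pci_addr)
```

In practice, many deployments instead reserve the device at boot with the `vfio-pci.ids=<vendor>:<device>` module parameter. Another option is to let libvirt rebind it automatically by setting `managed='yes'` on the `<hostdev>` element.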

OpenStack builds on KVM passthrough using Nova's PCI passthrough scheduling and flavor-based allocation. Although configuration is more complex, OpenStack enables multi-tenant GPU passthrough at scale with strong isolation and quota control, making it suitable for shared enterprise AI platforms.
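To illustrate the Nova side, the sketch below renders a minimal `[pci]` section for `nova.conf` and the matching flavor property. It assumes a recent Nova release, where the device filter option is named `device_spec`; older releases called it `passthrough_whitelist`. `10de` is NVIDIA's PCI vendor ID, while the product ID and alias name are placeholders to be replaced with values from `lspci -nn`. The alias generally needs to be defined on both controller and compute nodes, and the scheduler needs `PciPassthroughFilter` enabled.

```python
import json


def nova_pci_config(vendor_id: str, product_id: str, alias: str) -> str:
    """Render an illustrative [pci] section exposing one GPU model for passthrough."""
    spec = {"vendor_id": vendor_id, "product_id": product_id}
    # type-PCI marks a whole physical device (not an SR-IOV PF or VF).
    alias_def = dict(spec, device_type="type-PCI", name=alias)
    return "\n".join([
        "[pci]",
        f"device_spec = {json.dumps(spec)}",
        f"alias = {json.dumps(alias_def)}",
    ])


def flavor_property(alias: str, count: int = 1) -> str:
    """Flavor extra spec requesting `count` devices that match the alias."""
    return f"pci_passthrough:alias={alias}:{count}"
```

With an alias named `gpu-a`, the property could then be applied with `openstack flavor set --property pci_passthrough:alias=gpu-a:1 <flavor>`.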

Hyper-V supports GPU passthrough through Discrete Device Assignment (DDA), but it requires strict VM configurations and imposes limitations on memory management and clustering. This makes it viable for specific use cases but less flexible at large scale.

Operational Tradeoffs of GPU Passthrough for AI Workloads

GPU passthrough introduces constraints that must be designed around intentionally.

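Virtual machines with passthrough GPUs generally cannot be live migrated, and snapshot support may be limited. Hardware maintenance therefore requires workload scheduling rather than transparent failover. Because the GPU driver runs inside each guest, guest driver updates, as well as host kernel, firmware, and hypervisor updates, must be coordinated with the workloads running on those VMs.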
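Live migration is the constraint that most affects maintenance planning, so it helps to know which VMs actually hold passthrough devices. Assuming libvirt manages the VMs, the sketch below parses a domain definition (as printed by `virsh dumpxml`). It returns the host PCI addresses of the domain's `<hostdev type='pci'>` devices. The function name is illustrative.

```python
import xml.etree.ElementTree as ET


def passthrough_pci_devices(domain_xml: str) -> list[str]:
    """Return host PCI addresses (dddd:bb:ss.f) of PCI hostdevs in a libvirt domain."""
    root = ET.fromstring(domain_xml)
    addresses = []
    for hostdev in root.findall("./devices/hostdev[@type='pci']"):
        addr = hostdev.find("./source/address")
        if addr is None:
            continue
        # libvirt writes each field as a hex string such as '0x3b'.
        addresses.append(
            "{:04x}:{:02x}:{:02x}.{:x}".format(
                int(addr.get("domain", "0x0"), 16),
                int(addr.get("bus"), 16),
                int(addr.get("slot"), 16),
                int(addr.get("function", "0x0"), 16),
            )
        )
    return addresses
```

VMs that return an empty list remain candidates for live migration. Any VM that returns addresses must be stopped, or its workload rescheduled at the application layer, before the host is serviced.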

For AI workloads, these tradeoffs are often acceptable. Training jobs are long-running and stateful. Inference services are typically replicated at the application layer rather than relying on VM-level availability. In return, teams gain predictable performance, simpler debugging, and clear resource ownership.

Scaling AI Platforms Beyond a Single GPU Model

Adopting GPU passthrough does not lock an organization into a rigid architecture. In many cases, it establishes a performance baseline.

Teams often start with passthrough for critical training workloads to achieve near bare-metal performance. As usage grows, they introduce additional layers such as container orchestration, MIG-based (Multi-Instance GPU) partitioning, or shared GPU pools for less demanding tasks.

Modern AI infrastructure increasingly blends:

- GPU passthrough for training and fine-tuning

- Shared or partitioned GPUs for inference and development

- Orchestration platforms such as Kubernetes or OpenStack for scheduling

The key is aligning GPU access models with workload intent rather than forcing all workloads into a single virtualization pattern.

Dataplugs GPU Dedicated Servers for Passthrough Architectures

GPU passthrough virtualization is highly sensitive to infrastructure quality. Oversubscribed platforms, opaque PCIe layouts, and restricted firmware access introduce variability that undermines performance gains.

Dataplugs GPU dedicated servers provide an environment where passthrough can be deployed without compromise. These servers are designed to expose full control over compute, memory, and PCIe topology, allowing engineers to tune systems specifically for AI workloads.

Key characteristics that support GPU passthrough architectures include:

- Dedicated access to enterprise-grade NVIDIA GPUs

- Full PCIe bandwidth without oversubscription

- Support for IOMMU, VFIO, and custom kernel configurations

- Predictable NUMA layouts suitable for AI training

- High-bandwidth networking for data-intensive pipelines

Because resources are not shared with other tenants, GPU passthrough for AI workloads behaves consistently over time. This allows teams to combine passthrough, shared GPU models, and orchestration frameworks on the same platform as requirements evolve.

Conclusion

Deploying GPU passthrough in virtualized environments for AI is about restoring alignment between workload behavior and hardware capability. By assigning GPUs directly to virtual machines, organizations eliminate unnecessary abstraction, regain predictable performance, and unlock the full value of their accelerator investments.

When paired with dedicated infrastructure that offers full control over PCIe topology, memory placement, and system tuning, GPU passthrough becomes a stable foundation rather than a fragile exception. For AI teams designing training clusters, inference platforms, or hybrid GPU environments, this approach enables efficient utilization today while preserving flexibility for future growth.

To learn more about GPU dedicated servers designed for AI passthrough architectures, visit Dataplugs or connect with the team via live chat or email at sales@dataplugs.com.